vi. Pillar Three: Workloads

Strategy and Vision created the aspirational, architectural, and programmatic framework for our AI strategy; Ecosystem Architecture built the infrastructure, data, and application foundations. No doubt you have noticed that we’ve not yet discussed any specific AI-driven workloads. Take this as a lesson in how foundational data, and your data platform, are to any big dreams you have about artificial intelligence: the success of any AI workload depends critically on your success with strategy, vision, and, particularly, the ecosystem architecture discussed above.

Our Workloads pillar gets to what’s on the mind of most folks when they think about artificial intelligence: How will we use AI to solve real-world challenges?

“Workload” is not a throwaway word. It is a specific term that we use precisely because we value its imprecision. App-centric people speak in terms of apps, integration-centric people speak in terms of integrations, and so on; workloads cover it all. Simply put, a workload is a collection of one or more apps, chatbots, visualizations, integrations, data models, etc. working towards the same end. It is essentially the combination of the front end and back end required to produce an AI-driven response or action.

This pillar broadly addresses three topics:

Identifying and road mapping the best candidate workloads for development via Workload Prioritization;

Understanding the spectrum of different workloads through which AI can be used, and why balancing your portfolio across Incremental AI, Extensible AI, and Differential AI is so important;

Enabling the organization’s Power Users (also called “Citizen Developers”) and the Communities of Practice they form to extend and even develop AI capabilities for themselves.

Workload Prioritization

We (the authors) often say that “We’re not concerned with one app. We’re interested in one thousand workloads.” Maximizing the use of AI throughout an organization, truly weaving it into the culture and ways of doing business, is how we achieve real value (how we maximize return on investment) in artificial intelligence.

Our goal in Workload Prioritization (also known as “workload road mapping” or “app rationalization”, depending on which circle you’re running in) is to create a prioritized roadmap of specific workloads to be modernized with an infusion of AI or built anew to solve an emerging problem or a challenge whose solution may have been out of reach without AI. This prioritization is an indispensable part of an organization's ongoing AI journey. Prioritization results in a workload roadmap, a backlog of workloads that are candidates for development with AI capabilities. It allows the organization to project AI’s business value over time, and is a core driver of return on investment (ROI).

There are many techniques and patterns through which you might prioritize and re-prioritize workloads, including:

Alignment of the workload to the desired guiding principles or outcomes defined in your executive vision;

Legacy location, i.e. where the workload lives today. For example, evacuating the data center on the third floor of your headquarters building may present an excellent opportunity to rebuild previously on-premises workloads to be “AI native”;

Legacy technologies, similar to “legacy location” above, but related to technologies you wish to sunset rather than locations you wish to evacuate;

Telemetry such as monthly or daily active users, last active use, and data volume (remember to consider both structured and unstructured data);

Security or compliance risks that exist in whatever systems or processes you are currently employing relative to a given workload (i.e. is our current model too risky for the organization?);

Target technologies, in that you may highly prioritize workloads that target the same or similar technologies. For example, you may have just made a significant capacity investment in a particular AI development tool on which you’d like to maximize the return;

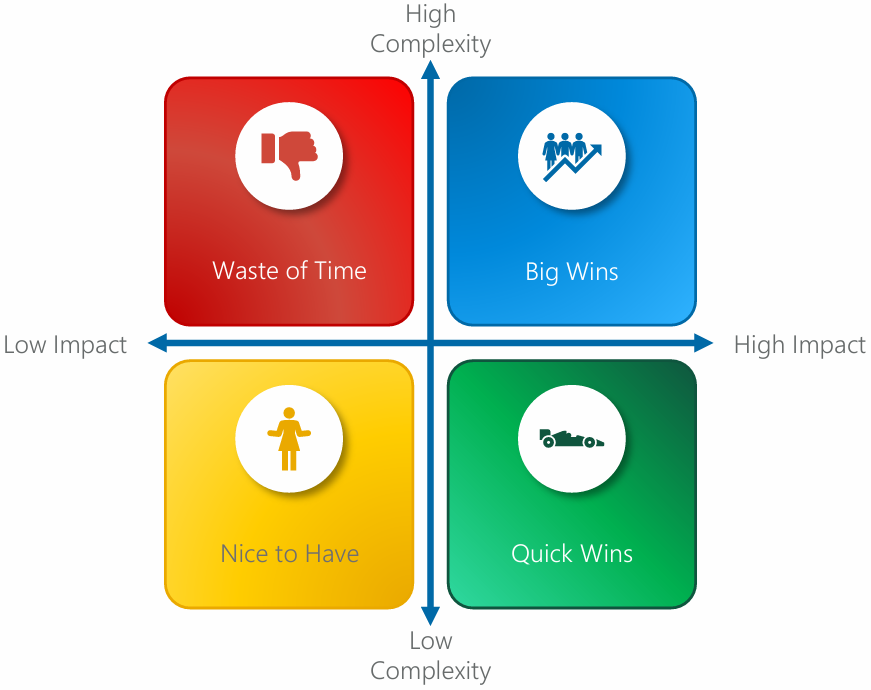

Qualitative Assessment, wherein we compare the relative impact or value of each workload to the organization against the complexity (technical, organizational, political, budgetary) of implementing it. This determines which workloads are big wins, quick wins, nice to have, or wastes of time.

There are likely more considerations, but these are our favorites. “Qualitative Assessment”, in particular, offers a relatively quick and effective way to elicit real business challenges from colleagues and then prioritize them, so that we can decide which AI workloads to invest in next.

Figure 21: The qualitative method for workload prioritization asks stakeholders to make qualitative judgements as to the relative impact or business value and complexity of implementation for each workload.
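The Qualitative Assessment described above can be sketched in a few lines of Python that sort workloads into the four outcomes it names. This is a minimal illustration under our own assumptions: the 1-to-5 scoring scale, the cut-off of 3, the rule that quick wins rank ahead of big wins, and the sample workloads are all hypothetical. In practice the scores come out of stakeholder conversations, not code.

```python
from dataclasses import dataclass

@dataclass
class Workload:
    name: str
    impact: int      # stakeholder-judged business value, 1 (low) to 5 (high); assumed scale
    complexity: int  # technical, organizational, political, budgetary; 1 (low) to 5 (high)

def quadrant(w: Workload, threshold: int = 3) -> str:
    """Place a workload in one of four quadrants; `threshold` is an assumed cut-off."""
    high_impact = w.impact >= threshold
    high_complexity = w.complexity >= threshold
    if high_impact and not high_complexity:
        return "quick win"
    if high_impact and high_complexity:
        return "big win"
    if not high_impact and not high_complexity:
        return "nice to have"
    return "waste of time"

def prioritize(workloads: list[Workload]) -> list[tuple[str, str]]:
    # Assumed ordering: quadrant first, then higher impact first within a quadrant
    order = {"quick win": 0, "big win": 1, "nice to have": 2, "waste of time": 3}
    ranked = sorted(workloads, key=lambda w: (order[quadrant(w)], -w.impact))
    return [(w.name, quadrant(w)) for w in ranked]

# Hypothetical candidate workloads scored by stakeholders
candidates = [
    Workload("Meeting recap assistant", impact=4, complexity=1),
    Workload("Regulatory guidance generator", impact=5, complexity=5),
    Workload("Cafeteria menu chatbot", impact=1, complexity=2),
    Workload("Legacy ERP rewrite with AI", impact=2, complexity=5),
]
for name, label in prioritize(candidates):
    print(f"{label:>13}: {name}")
```

Ranking quick wins ahead of big wins keeps the sketch simple. A real roadmap would interleave the two so that a few moonshots are always in flight.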

The roadmap is pivotal to ensuring that there is a flow of workloads inbound to the AI and data technologies in which we’ve invested, and that the organization is focusing its development resources on the workloads that will provide the highest value once deployed. In short, a healthy roadmap allows the organization to:

Continuously decrease marginal costs per workload whilst increasing overall return on investment;

Provide a trajectory of anticipated usage so that capacity can be scaled up gradually over time;

Achieve quick wins and big wins to justify early and long-term investment;

Focus development resources on the most valuable workloads;

Avoid costly development quagmires by de-prioritizing the least valuable or riskiest workloads.

We must therefore work directly with business stakeholders, users, and IT to identify, prioritize, and categorize candidate workloads and to create a roadmap for building them. These candidate workloads should be prioritized and re-prioritized on an ongoing basis so that we remain focused on the most important next efforts.

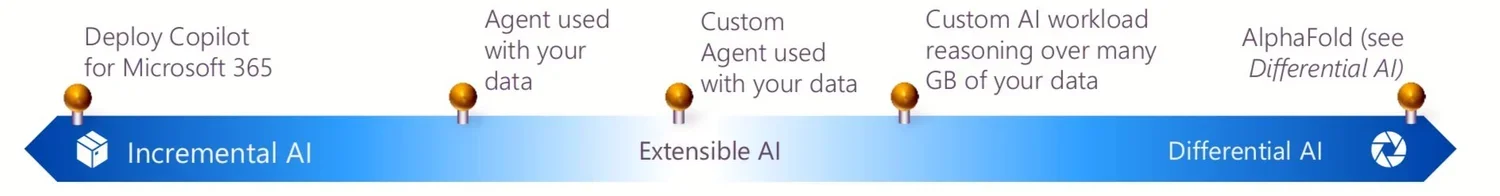

It is also useful to categorize each AI workload on your roadmap as incremental, extensible, or differential.

There is some risk here that specific AI workloads won’t work as their creators intended. No, we don’t mean that the AI will turn evil and obliterate its makers, rather that because we are (still) in the early days and because the technology’s true capabilities are not entirely known, organizations may find that a particular AI-driven workload just doesn’t produce the hoped-for results either because it lacks the data it needs or because the technology just isn’t ready for what it’s being asked to do. Wise organizations can therefore hedge this risk in several ways:

Balance your roadmap of AI workloads in development between incremental, extensible, and differential. Think of this as a spectrum where incremental incurs the least risk to success and differential incurs the most;

Relatedly, balance your roadmap of AI workloads in development between those that are medium-to-high impact but low complexity, as well as those that are high impact and high complexity. In other words, take a few moonshots, but balance the risk of your moonshots not panning out by also investing in less complex yet still valuable workloads. Distribute risk across your workload roadmap;

Make measured, incremental investment, and evaluate progress regularly. This is not the space for lengthy IT projects with big bang go-lives at the end. Instead, develop these workloads such that each sprint makes observable progress, preferably solving specific development challenges such that you either (a) build confidence in the solution as you move towards a minimum viable product, or (b) can quickly recognize when it’s time to pull the plug and invest elsewhere;

Augment your AI development with expertise, particularly around specific types of workloads where others have made good progress. Partner with others outside of your own firm, learn from the successes and failures of others, and don’t be afraid to learn and share;

Educate stakeholders and investors; set their expectations. AI can seem magical, but it is not magic. “Quick wins” are not particularly attainable if you’ve not done the hard work upfront around your data platform, and it’s still difficult for almost everyone to prognosticate about outcomes in such an unknown space.
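The balancing advice above can be turned into a simple roadmap health check. The sketch below computes each category's share of in-flight workloads and flags any share that falls outside a target band. The bands are purely illustrative assumptions, not recommendations; each organization would set its own according to its risk tolerance.

```python
from collections import Counter

# Hypothetical risk-tolerance bands: the share of the in-flight roadmap each
# category may occupy. Illustrative assumptions only, not guidance.
TARGET_BANDS = {
    "incremental": (0.30, 0.60),
    "extensible": (0.25, 0.50),
    "differential": (0.10, 0.30),
}

def portfolio_mix(categories: list[str]) -> dict[str, float]:
    """Return each category's share of the roadmap, from 0.0 to 1.0."""
    if not categories:
        return {cat: 0.0 for cat in TARGET_BANDS}
    counts = Counter(categories)
    return {cat: counts.get(cat, 0) / len(categories) for cat in TARGET_BANDS}

def balance_warnings(categories: list[str]) -> list[str]:
    """List categories whose share falls outside its assumed band."""
    warnings = []
    for cat, share in portfolio_mix(categories).items():
        low, high = TARGET_BANDS[cat]
        if share < low:
            warnings.append(f"{cat}: {share:.0%} is below {low:.0%}")
        elif share > high:
            warnings.append(f"{cat}: {share:.0%} is above {high:.0%}")
    return warnings

# A hypothetical roadmap that leans heavily on moonshots
roadmap = ["incremental"] * 2 + ["extensible"] * 3 + ["differential"] * 5
print(portfolio_mix(roadmap))
print(balance_warnings(roadmap))
```

Re-running a check like this at each re-prioritization makes drift toward an all-moonshot (or all-incremental) roadmap visible early.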

Finally, many organizations will want to conduct a business value assessment (BVA) to make a business case based on specific goals and expected outcomes of their AI adoption generally, and their development efforts for important and critical workloads specifically. Such assessments allow mature organizations to determine and make sound financial choices around considerations such as return on investment (ROI), net present value (NPV), and other industry-specific factors.
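As a worked illustration of the two standard measures mentioned above, NPV discounts each year's net cash flow CF_t at rate r, giving NPV = Σ CF_t / (1 + r)^t. Simple ROI is (total benefit − total cost) / total cost. The workload figures and the 10% discount rate below are hypothetical. A real BVA would also account for adoption curves, capacity costs, and industry-specific factors.

```python
def npv(rate: float, cash_flows: list[float]) -> float:
    """Net present value; cash_flows[0] occurs now (t = 0), later entries at each year end."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))

def simple_roi(total_benefit: float, total_cost: float) -> float:
    """Undiscounted return on investment, as a fraction of cost."""
    return (total_benefit - total_cost) / total_cost

# Hypothetical workload: 100,000 upfront build cost, then net benefits over three years
flows = [-100_000, 40_000, 50_000, 60_000]
print(f"NPV at 10%: {npv(0.10, flows):,.2f}")
print(f"Simple ROI: {simple_roi(150_000, 100_000):.0%}")
```

Simple ROI here is 50%, but the NPV is only about 22,765 because later benefits are worth less today. This is one reason to favor workloads that deliver value early.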

Incremental AI

Incremental AI is a broad, conceptual category of workloads in which AI is applied to bring speed, efficiency, scale, accuracy, quality, etc. to activities that a human would have otherwise performed.

Microsoft’s Copilots generally fall squarely into this bucket as they help their end user to reason over information more granularly, identify highest-potential sales targets more accurately, create content more quickly, book appointments more efficiently, write code more effectively, recap meeting action items easily, etc. These use cases tend to have two things in common:

They apply artificial intelligence to scenarios that were already being performed by a human, and would have gone on being performed by a human with or without AI;

They are often* performed in support of the organization’s overall purpose, not as the organization’s primary function.

* Big asterisk here, so let us explain what we mean by comparing the “book meetings more efficiently” and “write code more effectively” examples above.

In the case of the former, organizations do not typically exist for the purpose of having meetings (though there are days when this would feel like revelatory news to many of us). Booking an appointment is therefore a necessary task that creates the medium through which a service is provided, but it is not the service itself. This is a typical scenario for so-called incremental AI: AI acts as a “Copilot”, helping the human cut through various tasks to get to the real point of their work. At the risk of being called out here, we will acknowledge that there are some cases where AI is actually creating the products or rendering the services that are the ends, rather than a means to the ends. AI writing code for a developer at a software company is one such case. It’s a bit of a semantic discussion, but worth keeping in mind as you organize your thoughts.

In any case, the central question to ask yourself, your colleagues, and those formulating your AI strategy is, “Which activities currently being performed by humans could we make faster, more efficient, more accurate, or otherwise better by turning some or all of the task over to AI?”

These answers will often come easily, but it’s worthwhile to cast a wide net to ensure you’re maximizing the potential of AI in your incremental workloads. Consider…

Turn on AI you already own (or can easily acquire): Implement whichever of Microsoft’s extensive (and growing) range of “Copilot” products are likely to be most impactful to you.

Ask your people: Directly ask end-users and line of business owners to identify pain points in their work and their wish list for AI assistance.

Rationalize your workloads: Consider AI capabilities as part of any app rationalization or workload prioritization exercise you conduct.

Mine your processes: Use tools like “Process Advisor” in Power Automate to identify pain points in business processes that AI could mitigate.

Engage with your peers (and competitors): Keep your ear to the ground with competitors, “friendlies”, and industry groups to understand how they’re using AI.
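To make “mine your processes” more concrete, the Python sketch below illustrates the basic idea behind process mining: from an event log of case id, activity, and timestamp, compute the average time between consecutive activities and surface the slowest transition as a candidate pain point. This is not how Process Advisor itself is implemented. The invoice-handling log is hypothetical, and real tools handle far messier data.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean

# Hypothetical event log: (case id, activity, timestamp)
EVENTS = [
    ("inv-1", "Receive invoice", "2024-05-01 09:00"),
    ("inv-1", "Match to PO", "2024-05-01 09:30"),
    ("inv-1", "Approve", "2024-05-03 16:00"),
    ("inv-2", "Receive invoice", "2024-05-02 10:00"),
    ("inv-2", "Match to PO", "2024-05-02 10:20"),
    ("inv-2", "Approve", "2024-05-06 11:00"),
]

def transition_hours(events):
    """Average hours between consecutive activities, keyed by (from, to)."""
    by_case = defaultdict(list)
    for case, activity, ts in events:
        by_case[case].append((datetime.strptime(ts, "%Y-%m-%d %H:%M"), activity))
    gaps = defaultdict(list)
    for steps in by_case.values():
        steps.sort()  # order each case's events by timestamp
        for (t0, a0), (t1, a1) in zip(steps, steps[1:]):
            gaps[(a0, a1)].append((t1 - t0).total_seconds() / 3600)
    return {pair: mean(hours) for pair, hours in gaps.items()}

averages = transition_hours(EVENTS)
bottleneck = max(averages, key=averages.get)
print(f"Slowest step: {bottleneck[0]} -> {bottleneck[1]} ({averages[bottleneck]:.1f} h)")
```

Here approvals wait days while matching takes minutes. That kind of finding points to where an AI-assisted workload (for example, pre-screening approvals) might pay off.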

This is ultimately a workload prioritization and road mapping activity (reference the earlier Workload Prioritization dimension), so we recommend having a look at Andrew Welch’s “One Thousand Workloads” piece from several years ago (which addressed road mapping for Power Platform but is also quite applicable here), as well as more recent thoughts on “Workload Prioritization” which you’ll find in his “Strategic thinking for the Microsoft Cloud” piece from early 2023. The workloads that make it onto your roadmap should absolutely be included for implementation as part of your AI strategy.

Extensible AI

Though it barely registered as a concept in early 2024, by late 2024 it had become apparent that Extensible AI may be where the bulk of an organization’s AI workloads fall in the coming years.

Admittedly, there is a lot of grey area here, and in any case, the spectrum of incremental, extensible, and differential workloads is really meant more as a conceptual framework than any hard technical boundary. That said, extensible AI occupies the broad middle range where more incremental workloads are extended to suit an organization’s specific scenarios.

Figure 22: Incremental, Extensible, and Differential AI workloads provide a conceptual framework (a spectrum) for evaluating an organization’s portfolio of investment in AI workloads. Healthy portfolios are well balanced, though different organizations and industries will have varying risk tolerances.

For example, consider the scenario above where an organization has consolidated a store of its proprietary data and wants AI to reason over this data to produce generative responses via a chat-based user interface, including directly through Copilot for Microsoft 365. Even as recently as early 2024, this would have required engineering a bespoke retrieval-augmented generation (RAG) workload. That complexity would have landed this scenario squarely in the realm of Differential AI. The extensibility tools discussed below increasingly bring it within reach of Extensible AI.
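The RAG (retrieval-augmented generation) pattern mentioned above has two steps. First, retrieve the passages from your proprietary store that are most relevant to a question. Second, hand them to the model as grounding context. The Python sketch below shows only that shape. A naive keyword-overlap score stands in for a real search index such as Azure AI Search, and the sketch stops at assembling the grounded prompt rather than calling any particular model. The documents and prompt wording are hypothetical.

```python
import re

# Hypothetical proprietary knowledge store (in practice, an indexed search service)
DOCUMENTS = {
    "policy-travel": "Employees must book international travel through the travel desk.",
    "policy-expenses": "Expense reports are due within 30 days of travel completion.",
    "policy-security": "Laptops must use full-disk encryption at all times.",
}

def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question: str, k: int = 2) -> list[tuple[str, str]]:
    """Rank documents by shared terms with the question (a stand-in for vector search)."""
    q = tokenize(question)
    scored = sorted(
        DOCUMENTS.items(),
        key=lambda item: len(q & tokenize(item[1])),
        reverse=True,
    )
    # Keep the top k, dropping documents that share no terms at all
    return [(doc_id, text) for doc_id, text in scored[:k] if q & tokenize(text)]

def build_prompt(question: str) -> str:
    """Assemble a grounded prompt that cites retrieved passages by id."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in retrieve(question))
    return (
        "Answer using only the sources below and cite their ids.\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )

print(build_prompt("When are travel expense reports due?"))
```

Extensible AI tooling now handles much of this plumbing, which is precisely why the scenario has moved along the spectrum.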

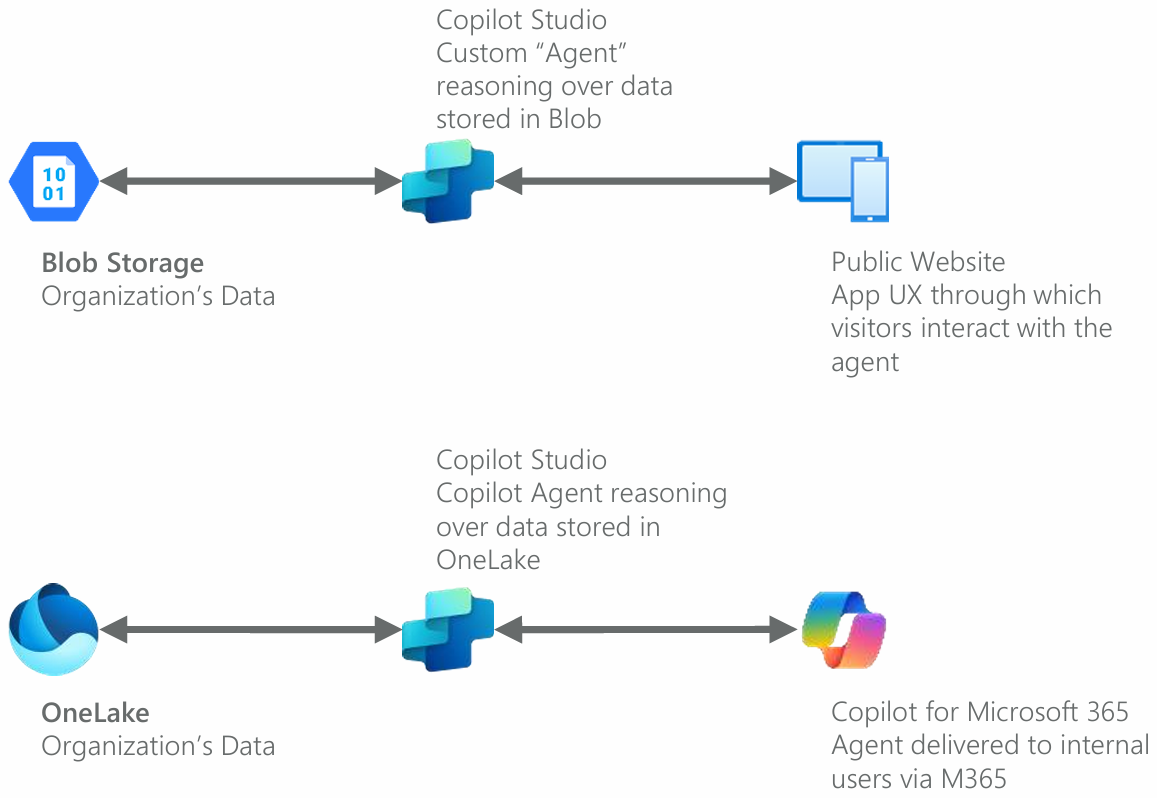

Figure 23: Simplified models of typical Extensible AI scenarios.

Developers can extend many Copilots using their organization’s data to further enhance the functionality of these Copilots, making them even more relevant and effective for specific business needs. Copilot Studio is increasingly purpose-built for just these types of scenarios, so if you’ve not yet read the AI Developer Tools dimension, this is a good segue to that earlier topic.

Let’s now discuss several other key extensibility tools:

Microsoft Graph connectors allow data from external systems to be indexed and made searchable within the Microsoft ecosystem. By integrating these connectors, developers can extend the capabilities of Microsoft Copilots to access and utilize data from a variety of sources. Graph connectors use APIs to crawl data from external sources such as file systems, databases, and SaaS applications. This data is then indexed and made available within Microsoft Search experiences. Consider a company that uses both SharePoint and an external CRM system. By creating a Graph connector for the CRM, employees can search for customer data directly from their Microsoft 365 environment, enabling seamless access to critical information;

Teams message extensions provide a way to extend the functionality of Microsoft Teams by allowing users to interact with your services and data directly within Teams messages. They can be categorized into Search Message Extensions and Action Message Extensions. These extensions allow users to search for information from external systems and insert the results into a Teams conversation:

A Search Message Extension could be developed to allow employees to search for knowledge base articles and insert relevant excerpts into a Teams chat, facilitating quick access to helpful information during discussions;

Action Message Extensions enable users to initiate workflows or perform actions based on message content, for example, allowing users to create a new task in a project management tool directly from a Teams message, streamlining task management and reducing context switching;

API plugins (in preview as of fall 2024) enable developers to integrate third-party APIs with Microsoft Copilots, allowing these intelligent assistants to interact with external services and retrieve data in real time. API plugins involve writing custom code that calls external APIs and processes the returned data. This data can then be used by the Copilot to provide informed responses or perform specific actions. A Copilot could be extended with an API plugin that retrieves weather data from an external service. Users could ask the Copilot for the current weather conditions or a forecast, and the Copilot would provide accurate, up-to-date information;

Copilot Studio Agents allow for the definition of Copilot behaviors through configuration rather than code. This approach can simplify the process of extending Copilots and make it accessible to non-developers. Copilot Studio Agents use configuration files or low-code tools to define how they should interact with users and data sources. These configurations can specify triggers, responses, and integrations with other services. A Copilot Studio Agent could be configured to monitor a SharePoint list for new entries and send a notification to a Teams channel whenever a new item is added. This setup requires minimal coding and can be quickly adapted to changing business needs;

Teams AI Library provides tools and frameworks for building intelligent bots and Copilots within Microsoft Teams. By leveraging these resources, developers can create sophisticated, AI-powered assistants that enhance collaboration and productivity. The Teams AI library includes pre-built models for natural language understanding, tools for building conversational interfaces, and integration capabilities with Microsoft Graph and other services. Using the Teams AI library, for example, a developer could create a Copilot that helps employees schedule meetings. The Copilot could understand natural language requests, check participants' availability, and suggest suitable meeting times, all within a Teams conversation.
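Once a scheduling request like the one in the Teams AI Library example has been understood, suggesting meeting times comes down to a small algorithm. Merge every participant's busy intervals, then report the gaps long enough for the meeting. The Python sketch below is framework-agnostic and does not use any Teams AI Library API. The busy times are hypothetical and expressed as minutes since midnight for simplicity. In practice they would come from calendar data (for example, via Microsoft Graph).

```python
# Hypothetical busy times, in minutes since midnight, from participants' calendars
BUSY = {
    "Ana": [(9 * 60, 10 * 60), (13 * 60, 14 * 60)],
    "Ben": [(9 * 60 + 30, 11 * 60), (15 * 60, 16 * 60)],
}

def free_slots(busy_by_person, day_start, day_end, duration):
    """Return (start, end) gaps of at least `duration` minutes when everyone is free."""
    # Pool every participant's busy intervals into one list sorted by start time
    busy = sorted(iv for intervals in busy_by_person.values() for iv in intervals)
    slots, cursor = [], day_start  # cursor = earliest time not yet known to be busy
    for start, end in busy:
        start, end = max(start, day_start), min(end, day_end)  # clip to working hours
        if start >= end:
            continue  # interval lies entirely outside working hours
        if start - cursor >= duration:
            slots.append((cursor, start))
        cursor = max(cursor, end)
    if day_end - cursor >= duration:
        slots.append((cursor, day_end))
    return slots

def hhmm(minutes):
    return f"{minutes // 60:02d}:{minutes % 60:02d}"

for start, end in free_slots(BUSY, 9 * 60, 17 * 60, 60):
    print(f"{hhmm(start)}-{hhmm(end)}")
```

For a one-hour meeting between 09:00 and 17:00, this yields 11:00-13:00, 14:00-15:00, and 16:00-17:00. The assistant's job is then to phrase those options conversationally.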

The bottom line of extensible AI, though, is that architects need to move quickly beyond black-and-white assessments of whether a pre-built AI tool such as Copilot for Microsoft 365 can do the job or whether entirely bespoke AI development is needed. Instead, they should increasingly embrace extensibility scenarios that fall in the broad middle of the spectrum. In other words, seek first to extend, then consider entirely custom development.
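The Python sketch below makes the Graph connectors CRM example above a little more concrete. It only builds the request a connector would send to index one CRM record: a URL for the Microsoft Graph `external/connections/{id}/items/{id}` endpoint, and a JSON body carrying access control, properties, and searchable content. The field names reflect our understanding of the Microsoft Graph `externalItem` resource and should be checked against current documentation. The connection id, CRM fields, group id, and schema are hypothetical. Authentication and the actual HTTP PUT are deliberately left out.

```python
import json

GRAPH_BASE = "https://graph.microsoft.com/v1.0"

# Hypothetical CRM record and group id (placeholder GUID)
CUSTOMER = {
    "id": "cust00042",
    "name": "Contoso Ltd",
    "owner": "adele@contoso.com",
    "region": "EMEA",
    "notes": "Renewal due in Q3; interested in a Copilot pilot.",
}
GROUP_ID = "00000000-0000-0000-0000-000000000000"

def external_item_request(connection_id, customer, allowed_group_id):
    """Build the URL and JSON body for indexing one CRM record as an external item."""
    url = f"{GRAPH_BASE}/external/connections/{connection_id}/items/{customer['id']}"
    body = {
        # Only members of this group should see the item in search results
        "acl": [{"type": "group", "value": allowed_group_id, "accessType": "grant"}],
        # Property names must match the schema registered for the connection (hypothetical here)
        "properties": {
            "title": customer["name"],
            "accountManager": customer["owner"],
            "region": customer["region"],
        },
        # Free text that Microsoft Search indexes for relevance
        "content": {"value": customer["notes"], "type": "text"},
    }
    return url, json.dumps(body)

url, body = external_item_request("contosocrm", CUSTOMER, GROUP_ID)
print("PUT", url)
print(body)
```

The access control list matters as much as the content. Indexing CRM data without it would let Copilot surface customer records to people who should not see them.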

Differential AI

We’re finally on to what popular culture might consider the “fun” bit, or at least the bit that dominates the imagination when it comes to artificial intelligence’s supposedly boundless possibilities. Incremental AI includes the scenarios that improve upon solo human performance of activities that would have been performed anyway. Differential AI, by contrast, broadly encapsulates workloads that would likely not have been performed by humans alone. These scenarios are valuable to the organization because they allow you to jump out ahead of your competition and offer your customers something that you’d not have otherwise been able to provide. We once toyed with the idea of calling this “Secret Sauce AI” or “Moonshot AI” to underscore the point.

Hallmarks of these differentiating, accelerative workloads are that they require a degree of creative thinking to dream up, can be challenging to implement, often involve deriving insights by mixing data that you already own but never had the ability to co-mingle, and operate along a time dimension, that is to say, involve some sort of computation or connection that must be completed within a window of time that makes human intervention more challenging.

On top of this, these workloads will require a degree of flexibility on your part, at least in the early days as you figure out exactly how to harness the power of this newfangled thing you’ve built.

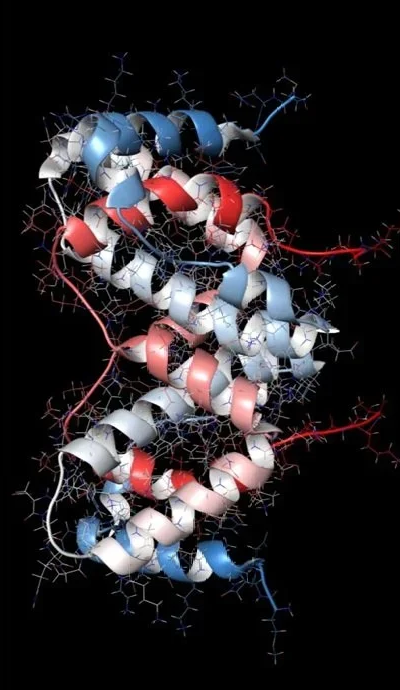

AlphaFold, developed by Alphabet’s DeepMind lab to make predictions about protein structure, is one of the world’s more successful examples of this type of differential AI. We will not (nor are we qualified to) dig too deeply into the biological particulars of what the thing does, but we will draw on some incremental AI to summarize it for us below.

“AlphaFold AI is a groundbreaking deep learning system designed by DeepMind that brings tremendous advancements to the field of protein structure prediction. By harnessing the power of neural networks and sophisticated algorithms, AlphaFold AI analyzes amino acid sequences and accurately predicts the three-dimensional structure of proteins, revolutionizing our understanding of their functions and enabling significant breakthroughs in drug discovery, disease research, and biotechnology. Its exceptional precision and speed promise to reshape the way scientists study protein structure, opening up new possibilities for tackling complex biological challenges and accelerating scientific progress.”

- A slick bit of incremental AI (ChatGPT)

In theory, many humans might have spent many years working this out in the absence of artificial intelligence. But this would have been impractical, if even achievable, and in this way AlphaFold serves as a (ridiculously brilliant) example of differential AI offering a benefit to its creator far beyond what would have otherwise been possible.

That said, differential AI need not offer groundbreaking promise for the future of humanity to be useful to one organization, team, or even to an individual’s world of work.

Figure 24: The practical examples discussed here each, in their own way, assimilate and reason over a combination of proprietary customer information, internal documentation, unstructured data, publicly available data, consolidated data stores, and observable characteristics of the physical world to produce a response or outcome.

Let’s consider some practical examples below.

Professional Services

For example, a law firm, accountancy, or consultancy might use AI to produce regular guidance advising its clients of upcoming regulatory or legal changes that may impact their business in the various jurisdictions in which they operate. A typical firm would know who its clients are, in which countries and sub-national regions (e.g. states, provinces, territories, counties, autonomies, etc.) its clients operate, and possess data concerning its clients' products or services. This proprietary information could be used in conjunction with (a) publicly available information and (b) internal documentation - indexed by Azure AI Search, of course - concerning regulatory or legal changes taking effect over (say) the next 6-12 months to produce tailored guidance for individual clients.
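Before any generative step, the professional services scenario needs a deterministic filtering step. It has to decide which upcoming changes matter to which client, based on the client's jurisdictions and line of business and the effective-date window. The Python sketch below shows only that matching. The client profiles, jurisdiction codes, sectors, and dates are hypothetical. Drafting the tailored guidance itself (for example, by grounding a model on internal documentation indexed in Azure AI Search) is not shown.

```python
from datetime import date, timedelta

# Hypothetical client profiles and feed of upcoming regulatory changes
CLIENTS = {
    "Client 1": {"jurisdictions": {"US-CA", "CA-ON"}, "sectors": {"food"}},
    "Client 2": {"jurisdictions": {"DE"}, "sectors": {"fintech"}},
}
CHANGES = [
    {"id": "R1", "jurisdiction": "US-CA", "sector": "food", "effective": date(2025, 3, 1)},
    {"id": "R2", "jurisdiction": "DE", "sector": "fintech", "effective": date(2026, 6, 1)},
    {"id": "R3", "jurisdiction": "CA-ON", "sector": "food", "effective": date(2025, 1, 15)},
]

def relevant_changes(client, today, horizon_days=365):
    """Changes in the client's jurisdictions and sectors taking effect within the horizon."""
    profile = CLIENTS[client]
    cutoff = today + timedelta(days=horizon_days)
    matches = (
        c for c in CHANGES
        if c["jurisdiction"] in profile["jurisdictions"]
        and c["sector"] in profile["sectors"]
        and today <= c["effective"] <= cutoff
    )
    return sorted(matches, key=lambda c: c["effective"])  # soonest first

today = date(2024, 11, 1)
for client in CLIENTS:
    print(client, [c["id"] for c in relevant_changes(client, today)])
```

Keeping this matching deterministic, and leaving only the drafting to AI, makes it easier to audit why a given client did or did not receive a given alert.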

Public Sector

A public sector law enforcement or foreign affairs organization might possess information in one quasi-consolidated data store that Firm A is owned by Firm B, which is itself a shell company owned by (say) a sanctioned Russian official. Another quasi-consolidated data store, or some selection of unstructured data may also indicate that Firm B owns a yacht that the global automatic identification system (AIS) knows is presently docked in an allied port. This serves as a great example of, among other things, workloads with a time dimension. A human could connect these dots, but it is less likely that a human could connect all these dots in a potentially narrow window of time during which that particular yacht was docked in that particular port.
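The public sector scenario above is, at its core, a join across separate stores. One store holds the ownership chain, another the sanctions list, and another the vessel's latest AIS-derived position. The Python sketch below connects those dots under our own simplifying assumptions: every entity, vessel, and port name is a hypothetical placeholder, and real ownership data is far noisier. The time dimension shows up in how such a check would be run: re-evaluated whenever a new position arrives, so an alert can fire while the vessel is still in port.

```python
# Hypothetical facts drawn from separate data stores
OWNED_BY = {"Firm A": "Firm B", "Firm B": "Official X"}  # corporate registry extract
SANCTIONED = {"Official X"}                             # sanctions list
ASSET_OWNER = {"Yacht Y": "Firm B"}                     # vessel registry
AIS_POSITIONS = {"Yacht Y": "Allied Port P"}            # latest AIS-derived position

def ownership_chain(entity, owned_by, max_depth=10):
    """Follow ownership links upward; max_depth guards against circular records."""
    chain = [entity]
    while chain[-1] in owned_by and len(chain) <= max_depth:
        chain.append(owned_by[chain[-1]])
    return chain

def flag_assets(asset_owner, owned_by, sanctioned, positions):
    """Return (asset, chain, location) for assets whose ownership reaches a sanctioned party."""
    alerts = []
    for asset, owner in asset_owner.items():
        chain = ownership_chain(owner, owned_by)
        if any(party in sanctioned for party in chain):
            alerts.append((asset, " -> ".join(chain), positions.get(asset, "unknown")))
    return alerts

for asset, chain, location in flag_assets(ASSET_OWNER, OWNED_BY, SANCTIONED, AIS_POSITIONS):
    print(f"ALERT: {asset} owned via {chain}; last reported at {location}")
```

The logic is trivial once the data is linked. The hard part, and the reason this depends on the ecosystem architecture discussed earlier, is getting registry, sanctions, and AIS data consolidated and joinable in time.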

Agriculture

An agricultural firm depends on phenotyping (observing and analyzing an organism's observable traits or characteristics to gain insights into its genetic, physiological, or environmental attributes) to breed and produce new vegetable varieties. This extremely laborious process can be significantly enhanced by image analysis assessing thousands of images per minute.

…and on it goes.

There are clearly overlaps between incremental, extensible, and differential AI workloads. Think of these as conceptual categories meant to help you place them in the context of your AI strategy (rather than hard technical boundaries). What the three examples above share, however, is that they move their organization’s use of AI beyond bettering or enhancing something that would have been done anyway. They reach the realm of achieving results that could not (realistically) have been produced otherwise.

As AI technology evolves, though, it seems certain that the line between these different flavors of AI workloads will blur further. We will move from “bolt-on” architectures and user experiences wherein AI is grafted onto pre-existing workloads or used in legacy form factors such as “the app” to “born-in-AI” solutions that have been architected from the ground up with AI in mind.

There is no perfect historical comparison here, but let’s illustrate the point by thinking back to the early days of smart watches. When this new form factor first became available, many of its early applications simply shrank that which was available in the mobile phone form factor onto a tiny, wearable screen. These apps were predictably terrible until developers began thinking of the wrist as an entirely new form factor with different constraints and opportunities.

AI represents a much more fundamental paradigm shift than a screen that’s similar to other screens but smaller, running apps that are similar to other apps but on your wrist. Suffice it to say, though, expect the discipline of born-in-AI workload design to mature in the years ahead as the industry at large accumulates enough experience to really understand what’s possible, what works, and, importantly, what doesn’t.

One can get a sense of where this may be going in the form of Project Sophia, which Microsoft describes as aiming “to help our customers solve complex, cross-domain business problems with AI, by enabling them to interact with data in new ways and answer strategic questions that drive better outcomes.” There’s no way to know if this is a form factor that will stick, but Sophia’s very existence points the way towards an era of creativity where even the future of the venerable “app” is up for grabs.

In any eventuality, it seems likely that those of us whose idea of what computing can be was shaped by our years growing up watching the crew of Star Trek verbally converse with “the computer” and be handed back responses that we’d now call “generative” will not have to wait long to see these dreams become reality.

What differential AI looks like in any specific organization is, well, specific to each organization. This of course risks spiraling into its own form of the use case death spiral, wherein fixation on an endless stack of workloads prevents action on workloads one, two, and three. Differential AI also takes us into the realm of trying to solve today’s problems with tomorrow’s promises. Recall one of our original, foundational principles:

“Any future-ready AI strategy must be flexible, meaning it is able to absorb tomorrow what we don’t fully grasp today.”

Your differential AI workloads ought to be baked into your AI strategy’s roadmap from the start, shepherded by technology and business leaders with a healthy tolerance for flexibility.

Power Users

Power Users are pivotal in the successful adoption and scaling of AI technologies within organizations. They are typically advanced users who leverage AI tools beyond their standard functionality, often discovering new use cases and pushing the boundaries of what is possible. By acting as early adopters, Power Users not only validate the utility of AI tools but also drive their evolution by providing critical feedback. They represent a bridge between the general user base and technical teams, translating complex AI functionalities into practical, everyday applications.

We’ve included this dimension as a part of the Workloads pillar because these Power Users should be enabled to (a) evangelize and promote the use of AI workloads amongst their colleagues and teams, and (b) develop AI workloads themselves, particularly Extensible AI workloads, using tools like Copilot Studio.

To further bridge that gap, it is important we introduce yet another concept: Communities of Practice (CoPs).

Communities of Practice (CoPs) are vital in embedding AI into the organizational culture. These communities bring together individuals across various roles and departments to share knowledge, best practices, and insights about AI tools like Microsoft Copilot. CoPs facilitate continuous learning, enabling members to stay current with AI advancements and to collaboratively solve challenges related to AI adoption. By fostering a culture of open dialogue and experimentation, CoPs help organizations navigate the complexities of AI, making its deployment more strategic and effective.

Organizations must provide access to the right tools, training, and resources to enable Power Users and the CoPs they lead. In other words, they must invest in them and set them up for success. That means establishing a robust support framework that covers everything from understanding data security within AI contexts, to getting skilled up with a selection of Copilots and, of course, learning the art of prompt engineering.

It sounds like a lot, and that’s because it is. To maximize the impact and avoid potential chaos, organizations should:

Establish clear roles: Define the roles and responsibilities of specific Power Users and CoPs to clarify their focus within AI initiatives, for example testing new features, providing feedback, or mentoring other users.

Facilitate engagement: It’s important to encourage active participation in CoPs through regular meetups, workshops and collaborative platforms that support knowledge sharing and problem solving.

Support continuous learning: Offer ongoing training, access to AI tools and resources and give them the opportunity to apply their skills to projects or proofs of concept, keeping Power Users at the forefront of AI innovation.

Be strategic about the impact of Power Users and CoPs: Harness their capabilities and use them to ease user adoption and reduce resistance to change, ensuring AI adoption is aligned with business objectives (record the benefits of using AI in said objectives and track their progress).
