vii. Pillar Four: Responsible AI

Science fiction abounds with tales of the computer surpassing and, eventually, dethroning the human. In the real world, though, the need to regulate and moderate artificial intelligence is enacted through the discipline of Responsible AI (RAI).

Microsoft has established a set of RAI principles that guide the ethical development and deployment of AI. These principles (Reliability and Safety, Privacy and Security, Fairness and Inclusivity, Transparency, and Accountability) are essential to ensuring that AI is used safely within an organization. Microsoft itself lists fairness and inclusiveness as separate principles; because the two are so closely related, we pair them, and the resulting five principles enter the AI Strategy Framework as the dimensions of our Responsible AI pillar.

We need to be unequivocal here, lest organizations foolishly treat RAI as the first thing to cut when budgets tighten:

Responsible AI is not optional. Omitting it from your AI strategy is, in fact, irresponsible, and exposes the organization to intolerable levels of risk. You must either take RAI seriously or walk away from AI altogether.

This is not to say that RAI is more important than the other four pillars. Rather, organizations that fail to take, for example, workload prioritization seriously are likely to waste time and money, whereas organizations that don’t take RAI seriously face the possibility of being sued and regulated out of existence.

Microsoft is researching and analyzing various RAI scenarios with the goal of defining risks and then measuring them using annotation techniques in simulated real-world interactions. This work draws on its product and policy teams as well as the company’s AI Red Team, a group of technologists and other experts who poke and prod AI systems to see where things might go wrong.

Reliability and Safety

AI workloads and their underlying infrastructure, models, and use of data must be reliable and safe in any scenario into which they are deployed. This principle emphasizes the importance of building AI systems that are dependable and secure, capable of functioning correctly under diverse and unforeseen circumstances. It also involves rigorous testing and continuous monitoring to prevent failures and mitigate risks.

For example, AI is increasingly used in healthcare for diagnostic purposes. To ensure reliability and safety, a hospital might implement an AI-based diagnostic tool and conduct extensive testing in controlled environments before full deployment. Continuous monitoring and updates ensure that the tool performs accurately and safely in real-world medical scenarios.

Before the advent of generative AI, software was almost entirely deterministic, which is to say that its programming provided a fixed set of defined outcomes for any input it received. For example: when a new lead is created, check whether the contact exists; if the contact does not exist, create it.

Deterministic programs can be tested for every possible outcome, because the outcomes can be quantified and defined.
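To make this concrete, here is a minimal Python sketch of the lead-handling rule described above. The function name `handle_new_lead` and the in-memory set standing in for a CRM contact table are hypothetical illustrations, not any product's API. Because the rule has only two possible outcomes, a handful of assertions can cover both of them exhaustively.

```python
def handle_new_lead(email: str, contacts: set[str]) -> str:
    """Deterministic rule: create a contact only if one does not already exist.

    `contacts` is a hypothetical in-memory stand-in for a CRM contact table.
    The outcome is returned so that every path can be asserted in a test.
    """
    if email in contacts:
        return "contact exists"
    contacts.add(email)
    return "contact created"


# Every possible outcome can be enumerated and checked in advance.
contacts = {"ana@example.com"}
assert handle_new_lead("ana@example.com", contacts) == "contact exists"
assert handle_new_lead("ben@example.com", contacts) == "contact created"
assert contacts == {"ana@example.com", "ben@example.com"}
```

Given the same input and the same starting contacts, this function always produces the same result, which is exactly the property generative AI lacks.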

Modern AI is largely non-deterministic, meaning that the program chooses its own path (its own adventure, if you will) each time it is run. Responses, even to an identical prompt, can vary each time a response is generated.
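In contrast to a fixed rule, generative models typically pick each next token by sampling from a probability distribution. The toy sketch below imitates that idea in a greatly simplified way; the vocabulary, the scores in `LOGITS`, and the function names are invented for illustration, and real models work over vocabularies of many thousands of tokens with additional controls layered on top.

```python
import math
import random

# Hypothetical next-word scores (logits) after a prompt like "a lighthouse on a ..."
LOGITS = {"cliff": 2.0, "rocky shore": 1.6, "stormy night": 1.2, "quiet harbor": 0.8}


def softmax(logits: dict[str, float], temperature: float) -> dict[str, float]:
    """Convert scores to probabilities; a lower temperature sharpens the distribution."""
    scaled = {word: score / temperature for word, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {word: math.exp(s - peak) for word, s in scaled.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}


def sample_next_word(rng: random.Random, temperature: float = 1.0) -> str:
    """Draw one word at random, weighted by its probability."""
    probs = softmax(LOGITS, temperature)
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]


rng = random.Random()  # unseeded, so results differ from run to run
print([sample_next_word(rng) for _ in range(5)])  # same "prompt", varying outputs
```

Seeding the generator (for example, `random.Random(42)`) makes this toy reproducible, and lowering the temperature concentrates probability on the top-scoring word. Neither is a substitute for ongoing monitoring, since production AI services may still return different responses to identical prompts.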

Let’s illustrate this non-deterministic phenomenon with an innocent example.

In writing this chapter, we provided Microsoft Copilot with the following simple prompt:

Please paint me a picture of a lighthouse.

Copilot returned a response several seconds later, generated by Microsoft Designer using DALL-E 3.

Figure 25: The first painting of a lighthouse that Microsoft Copilot returned.

We then repeated the same prompt, only to have Copilot return a different generated image.

Figure 26: Copilot then returned a different image when prompted again just a minute later.

All of which is to say that reliability and safety (and, really, all five RAI dimensions) cannot be deterministically tested in advance and then left to run on their own. RAI requires an ongoing cycle of monitoring, correction, tuning, and retesting to produce responses that are ever more closely aligned with RAI principles. It also requires tolerance for the reality that AI will make mistakes, including producing irresponsible responses. That is all the more reason to attend to the organization’s collective digital literacy, so that people can recognize these errors and take part in continually refining the AI workloads with which they interact.
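One way to put that ongoing monitoring into practice is to track how often reviewers flag responses as problematic over a rolling window, and to escalate when the rate crosses a threshold. The Python sketch below assumes a hypothetical `ResponseMonitor` class; the window size and threshold are illustrative placeholders that each organization would set according to its own risk tolerance.

```python
from collections import deque


class ResponseMonitor:
    """Tracks the share of recently reviewed AI responses that were flagged.

    Hypothetical sketch: the window size and alert threshold are illustrative
    defaults, not recommended values.
    """

    def __init__(self, window: int = 100, threshold: float = 0.05) -> None:
        self.recent: deque[bool] = deque(maxlen=window)  # True = flagged
        self.threshold = threshold

    def record(self, flagged: bool) -> None:
        """Log one reviewed response; the oldest entry drops off when the window is full."""
        self.recent.append(flagged)

    def flag_rate(self) -> float:
        """Share of responses in the current window that were flagged."""
        return sum(self.recent) / len(self.recent) if self.recent else 0.0

    def needs_attention(self) -> bool:
        """True when the flagged rate exceeds the organization's tolerance."""
        return self.flag_rate() > self.threshold


monitor = ResponseMonitor(window=10, threshold=0.2)
for flagged in [False, False, True, False, True, True, False, False, False, False]:
    monitor.record(flagged)
print(monitor.flag_rate(), monitor.needs_attention())  # 0.3 True
```

A rolling window keeps the signal focused on recent behavior, so the effect of a correction or re-tuning shows up as older flagged responses age out.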

Privacy and Security

AI systems must be designed to protect individual privacy and ensure data security. This dimension focuses on safeguarding personal data against unauthorized access and misuse. It involves implementing robust security measures and ensuring transparency about data collection, usage, and storage practices.

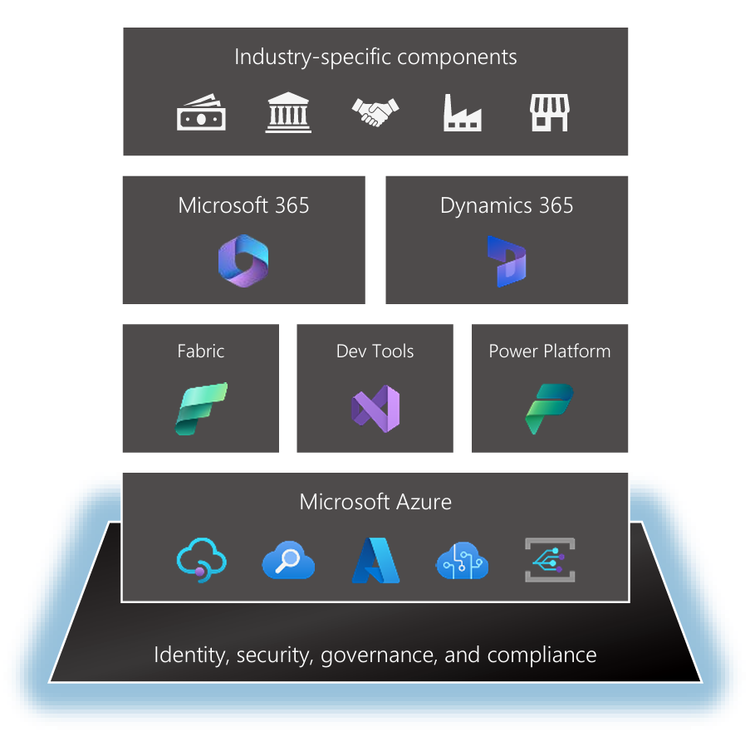

Figure 27: Microsoft’s major platforms or product families build upon one another. Fabric, Dev Tools, and Power Platform sit atop Azure services, and in turn Microsoft 365, Dynamics 365, and the industry-specific components are end-user applications dependent on the platform services sitting beneath them.

Consider a smart home device that uses AI to learn and adapt to the user's preferences. To adhere to privacy and security principles, the manufacturer must encrypt all data transmissions, provide clear information on data usage, and give users control over their data. Regular security updates and vulnerability assessments also help protect user privacy.

Organizations that have robustly implemented Microsoft Azure across their cloud ecosystem have an in-built advantage here.

You’ve likely seen a version of this building blocks diagram if you’ve been paying attention to Microsoft marketing over the last several years, but allow us to direct your attention to the foundational “identity, security, governance, and compliance” layer. Data security is emerging as one of the strongest cases to be made in favor of adopting a Microsoft-centric ecosystem rather than piecing together “best of breed” ecosystems from amongst disparate software vendors. Just as Apple has been able to create highly integrative user experiences by controlling its consumer technology end-to-end, so too do we believe Microsoft will increasingly create highly integrative data security experiences by (more or less) controlling its data platform technology end-to-end.

Privacy and security is an area where existing infrastructure has raced to keep up with the evolution of generative AI technology in recent years. No technology company has a silver bullet, but by integrating both sides of the coin, security infrastructure and the data platform, Microsoft has made significant strides through its investments in technologies including Entra ID (formerly Azure Active Directory), Purview for data governance, the security models built into technologies like Dataverse and OneLake, and more. We’re far from the promised land of a fully integrated security model across the entire data estate, but we’re getting there. This is an exciting area to watch in the coming years, even if many consider it niche.

Fairness and Inclusivity

Fairness and Inclusivity are two highly related yet subtly different concepts.

The more subjective of the two is fairness: AI systems should treat all people equally and equitably, without bias or discrimination, including bias against people with disabilities. Fairness is notoriously difficult to quantify; ensuring it involves identifying and eliminating biases in AI systems that might lead to unfair treatment of individuals based on attributes such as race, gender, age, or other protected characteristics. It is essential for maintaining trust and social harmony.

A typical employee hiring process offers a real-world example of this principle. Imagine a company using an AI-based recruitment tool. To ensure fairness, the company must regularly audit the AI system for biases and make necessary adjustments. For example, if the system favors candidates from certain demographics over others without a valid reason, the algorithms need to be revised to eliminate such biases.
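One common starting point for such an audit is to compare selection rates across groups. The Python sketch below computes each group's selection rate and its ratio to the highest-rate group, flagging ratios below 0.8 in the spirit of the “four-fifths rule” from US employment-selection guidance. The group labels and counts are invented for illustration, and a low ratio is a screening signal that warrants investigation, not proof of bias (nor is a passing ratio proof of fairness).

```python
def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Map each group to selected / applicants. Input: group -> (selected, applicants)."""
    return {group: sel / total for group, (sel, total) in outcomes.items()}


def adverse_impact_ratios(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    return {group: rate / best for group, rate in rates.items()}


def flag_groups(outcomes: dict[str, tuple[int, int]], threshold: float = 0.8) -> list[str]:
    """Groups whose ratio falls below the threshold (four-fifths = 0.8)."""
    return [g for g, r in adverse_impact_ratios(outcomes).items() if r < threshold]


# Hypothetical screening results from an AI recruitment tool: (advanced, applied)
results = {"under 40": (50, 100), "40 and over": (30, 100)}
print(adverse_impact_ratios(results))  # {'under 40': 1.0, '40 and over': 0.6}
print(flag_groups(results))            # ['40 and over']
```

Running a check like this on every model update, rather than once at launch, fits the continuous-audit posture described above.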

Age, interestingly, has become a common manifestation of the fairness problem in AI: models can be unduly influenced by the extraordinary volume of internet content produced by younger generations, and inadvertently favor members of those generations in processes such as hiring.

Inclusivity is relatively more straightforward to quantify and measure. It means ensuring that AI systems are accessible and beneficial to a diverse range of people, including those with disabilities. This principle underscores the importance of designing AI technologies that are usable by people from all backgrounds and abilities. It promotes equal access to AI's benefits and encourages diverse perspectives in AI development.

For example, consider the development of AI-powered language translation tools. By supporting multiple languages and dialects, these tools enable people from different linguistic backgrounds to communicate more effectively. Adding features such as voice recognition for people with speech impairments further enhances inclusivity.

Transparency

Transparency involves making AI systems understandable and providing clear information about how they operate. This principle highlights the need for openness about AI decision-making processes: users ought to be informed about how AI systems work, the data they use, and the algorithms they employ. Transparency fosters trust and accountability.

In the financial sector, AI algorithms are often used for credit scoring. Adhering to transparency principles might lead a bank to provide customers with detailed explanations of how their credit scores are calculated, including the factors considered and their respective weights. This helps customers understand and trust the AI workload and its underlying infrastructure, models, and grounding data.
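For a simple additive scorecard, that kind of explanation can be generated directly from the model: each factor's contribution is its weight multiplied by the applicant's value. The factors, weights, and base score below are entirely hypothetical and do not reflect any real credit-scoring model; more complex models would need dedicated explanation techniques.

```python
# Hypothetical additive scorecard: points per unit of each factor.
BASE_SCORE = 500
WEIGHTS = {
    "on_time_payment_rate": 200,  # share of payments made on time, 0-1
    "credit_utilization": -150,   # share of available credit in use, 0-1
    "years_of_history": 5,        # length of credit history in years
}


def explain_score(applicant: dict[str, float]) -> tuple[float, list[tuple[str, float]]]:
    """Return the score and each factor's contribution, largest impact first."""
    contributions = [(name, WEIGHTS[name] * applicant[name]) for name in WEIGHTS]
    score = BASE_SCORE + sum(points for _, points in contributions)
    contributions.sort(key=lambda item: abs(item[1]), reverse=True)
    return score, contributions


score, reasons = explain_score(
    {"on_time_payment_rate": 0.95, "credit_utilization": 0.4, "years_of_history": 8}
)
print(f"Score: {score:.0f}")
for name, points in reasons:
    print(f"  {name}: {points:+.0f} points")
```

For this sample applicant the sketch reports a score of 670, with on-time payments contributing the most and credit utilization pulling the score down, which is the kind of factor-by-factor account a customer could act on.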

Accountability

Accountability ensures that organizations and individuals are responsible for the outcomes and responses produced by their AI workloads. This principle emphasizes the need for clear lines of responsibility and mechanisms for addressing issues that arise from AI deployment. Organizations must be prepared to acknowledge and take corrective action when AI systems cause harm or operate incorrectly.

Corrective actions must also be timely. Organizations must be resourced to resolve harmful, incorrect, or otherwise problematic responses that run counter to RAI principles, and such issues ought to be treated with the urgency of a critical error in a Tier 1 core business system. The programmatic and technical proficiency to diagnose, triage, and resolve these issues is a core competency for any organization deploying AI tools.

The need for accountability can be seen, for example, in self-driving cars. If an autonomous vehicle is involved in an accident, there must be a framework to determine responsibility. The car manufacturer would need to have processes in place to investigate the incident, address any faults in the system, and provide appropriate remedies to those affected.
