Pillar Two: Ecosystem Architecture

Think about the way most IT has evolved over the course of several decades. For much of this history, organizations acquired software by ginning up a specific need or “use case,” which was often followed by a basket of requirements for that use case that was either handed to a development team or turned into a procurement. Infrastructure, whether on-premises or cloud, was then deployed to accommodate the specific solution that resulted.

Ecosystem-oriented architecture (EOA) inverts this approach. Ecosystem architects seek first to build a cloud ecosystem, that is, a collection of interconnected technical services that are flexible (or “composable”), re-usable, and highly scalable. The ecosystem then expands, contracts, and is adapted over time to accommodate the workloads deployed within it.

[Figure: Ecosystem Architecture pillar dimensions: Core Platform Services, Data Distribution, Integration, Business Applications, AI, Development Tools]

EOA is ideal for scaling AI because it promotes data consolidation as a first principle. It avoids the data fragmentation that point solutions tend to cause, both through data services tied to the individual application and through point-to-point integrations with other point solutions.

Earlier we shared a sample ecosystem map (see the Ecosystem Map dimension in the Strategy and Vision pillar). Let’s now dig into the concept of EOA a bit further, as it is essential to understanding the Ecosystem Architecture pillar.

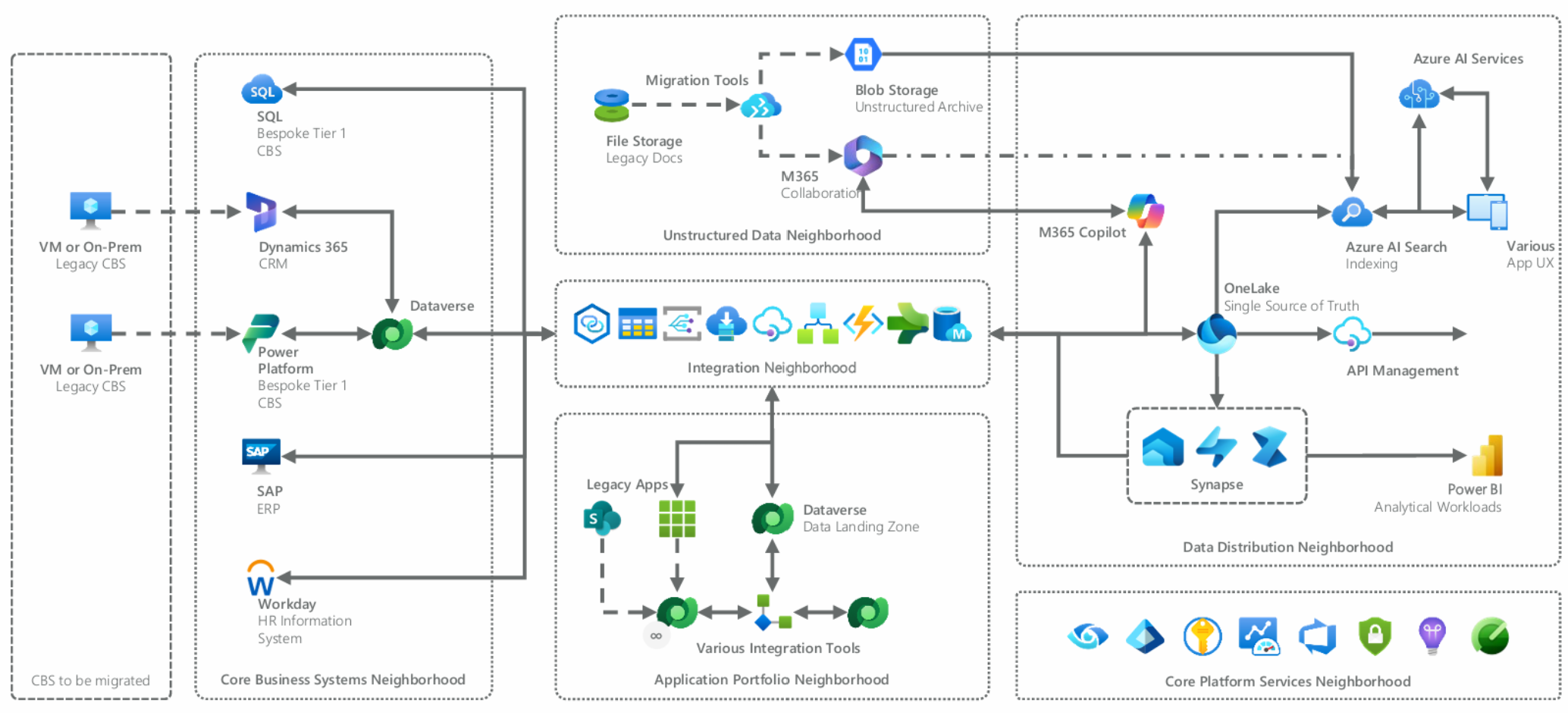

We’ve created what we call the “Reference Ecosystem”, essentially a composite of dozens of enterprise organizations’ cloud ecosystems that we’ve studied across many industry sectors and geographies.

Figure 9: The “Reference Ecosystem” is a composite of ecosystem-oriented architecture (EOA) across many different organizations in many different industries. Consider it the “North Star” for an effective EOA across and entire cloud estate and use it as a reference to build your own.

To orient you, the map draws a clear analogy between the cloud ecosystem and a city divided into “neighborhoods.” These neighborhoods are conceptual; in other words, one should not necessarily construe them as hard logical boundaries such as environments, subscriptions, or tenants. Rather, the “neighborhood” concept helps us understand, in a clear and relatable way, the categories, relationships, and boundaries of technical services and workloads in what can be a vast cloud ecosystem.

Adopting an ecosystem-oriented architecture across an enterprise organization supports your AI strategy in many ways. Fully adopted EOA is the pinnacle of many of the strategies we’ve already discussed, the enterprise architecture “North Star,” if you will, in the era of AI:

Use this Reference Ecosystem to orient you to the first four dimensions of our Ecosystem Architecture pillar, specifically Core Platform Services, Data Distribution, Integration, and Business Applications (which combines the Core Business Systems and Application Portfolio neighborhoods shown in the reference);

EOA speeds the deployment of AI workloads, creating the conditions for the “quick wins” that many expect to achieve with AI, only to find that they have not done the necessary work around data consolidation, readiness, and scaling. Time to value is much shorter when your data is already consolidated, indexed, governed, and secured, and when supporting services such as application lifecycle management (ALM) and MLOps are in place;

EOA organizes and integrates “traditional” workloads, found primarily in the Core Business Systems, Application Portfolio, and Unstructured Data neighborhoods, in a way that supports AI workloads downstream. This integration helps prevent new silos of unconsolidated data from emerging as organizations scale, a problem many will otherwise struggle with;

Undertaking a transition to ecosystem-oriented architecture aligns well with the two core principles that underpin the AI strategy:

Your AI strategy must be flexible, able to absorb tomorrow what we don’t fully grasp today. Cloud ecosystems are designed to behave like living, breathing entities that evolve to meet today’s needs and achieve the promise of future innovation;

Your strategy should offer value to the organization beyond specific AI-driven workloads, because the nature and value of these workloads will remain unclear for some time. Your transition to EOA is a great investment in AI, but it also brings value to the organization through shorter time to value, resolution of technical debt, retirement of legacy licensing and capacity costs, reduced organizational risk around data governance and security, and workloads such as search, external integration, analytics, and reporting.

Core Platform Services

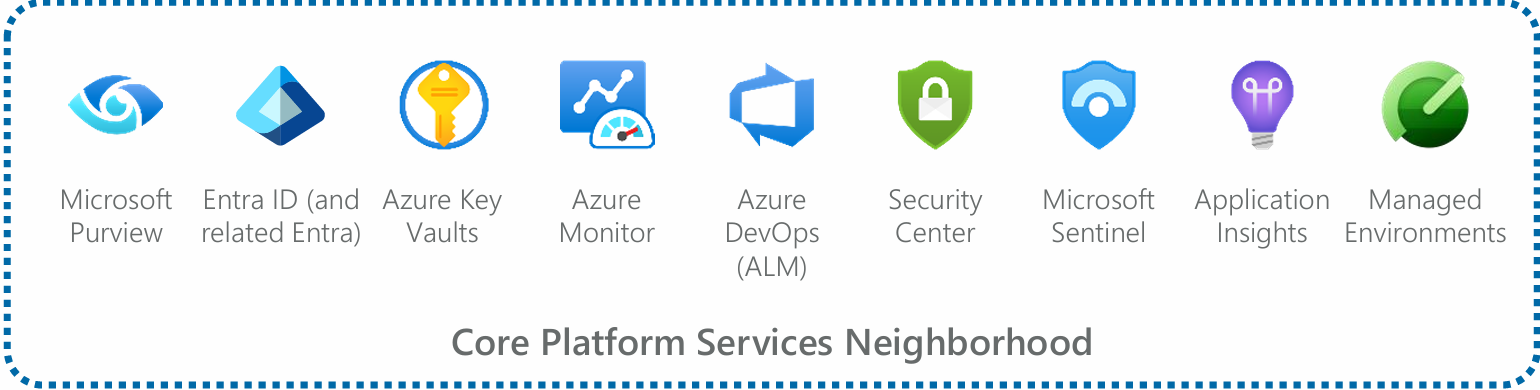

Core Platform Services include many of the infrastructure, security, governance, management, and monitoring services used across a cloud ecosystem, and are largely synonymous with a “cloud landing zone.” The Reference Ecosystem shows (left to right) Purview, Entra ID (formerly Azure Active Directory), Key Vaults, Azure Monitor, Azure DevOps (ADO), Security Center (now Microsoft Defender for Cloud), Sentinel, Application Insights, and Power Platform Managed Environments as examples. There will surely be others, but we have chosen these as representative “core services” that nearly every modern cloud ecosystem should contain to technically support an organization’s AI strategy.

Figure 10: A magnified view of the Core Platform Services Neighborhood from the Reference Ecosystem.

Core Platform Services, and Microsoft Purview and Managed Environments in particular, also play a vital role in data governance, which refers to the measures taken to secure, govern, and cleanse data, establish lineage and compliance, manage metadata, and so on. In other words, have you established the conditions for your data to produce quality responses when consumed by AI?

Though establishing baseline data governance through Microsoft Purview is very much a part of your core platform services, it’s too important a topic to hide away. So, we’ve broken it out into its own dimension, which we discuss as part of the Scaling AI pillar later in the paper.

In any case, our core platform services approach to data readiness involves good, old-fashioned best practices for building and maturing your cloud landing zone and, over time, your broader cloud estate. Given the convergence of data platform infrastructure and business applications, this must include both typical Azure and Power Platform security and infrastructure measures. Here we’re talking about nuts-and-bolts capabilities such as identity management via Microsoft Entra ID (formerly “Azure Active Directory”), as well as services that are less well known outside the circles of technologists who specialize in them, such as Key Vaults, Azure Monitor, Security Center, and Managed Environments. The essential task here is to mature your cloud infrastructure in accordance with industry best practices, with an emphasis on data security and governance.

Technical documentation on this subject abounds, so we won’t belabor the discussion here. The real question every organization must answer when evaluating the current state and direction of its core platform services is whether it has built a broadly based cloud landing zone. That landing zone should provide for the technical availability, management, and governance of the organization’s cloud infrastructure (Azure, in the case of Microsoft), data platform (Fabric and related services), and business applications (Power Platform, Dynamics 365, and Azure application services), as well as the application lifecycle management (ALM) needed to shepherd both traditional and AI-based workloads to production.
